Hello! I’m cw-ozaki, and I work in the SRE department.

Continuing from part three, in this installment I’d like to look at how we migrate applications to new clusters in line with our Kubernetes cluster update strategy.

- Simple summary of the last article

- Current framework at Chatwork

- 1. Route53 -> CloudFront

- 2. CloudFront -> ALB

- 3. ALB Target Group

- 4. Kubernetes

- How do you create ALB and Route53 weighted records?

- Summary

Simple summary of the last article

The Kubernetes cluster at Chatwork is operated according to the following principles.

- Multi-tenant, single cluster

- Upgrade clusters using Blue/Green, building a brand-new cluster for each upgrade

- Upgrade roughly once every three months

These policies also impose constraints on application development.

For stateless applications, the issue is how well requests from clients can be split between the new and old clusters. For stateful applications, other aspects must also be considered, such as migrating state in step with that split, and if the application is restricted to running as a single instance, a way to resolve that restriction must be found as well.

If this restriction can’t be resolved, please give up on moving to Kubernetes and run the application on something else, such as EC2.

In addition, since updates happen once every three months, the amount of work required for each update must be kept to a minimum.

For example, if preparing and carrying out a migration takes weeks, team members may be too busy with other work at migration time to take on such a heavy task, making the update impossible.

Current framework at Chatwork

Fortunately, the web portion of our legacy PHP system (the chat screen that all of you use) is a stateless web application.

For a cloud-native web application, this is a common architecture these days.

If your web application isn’t stateless yet, removing state from it is a good first step.

Since the web portion is stateless, migrating to a new cluster is a matter of routing user requests away from the old cluster to the new one. There are four points at which this routing can be performed.

- Route53 -> CloudFront

- CloudFront -> ALB

- ALB Target Group

- Kubernetes

Below, I explain how requests can be routed at each of these points.

1. Route53 -> CloudFront

Requests cannot be routed with Route53 weighted records that point directly at CloudFront. This is due to a CloudFront limitation: you cannot create more than one distribution for the same domain.

For this reason, user requests must be routed at a resource further downstream.

2. CloudFront -> ALB

By switching the CloudFront origin, requests can be switched between multiple ALBs.

However, this is an all-or-nothing (0/1) switch between the old and new clusters, and because it is a CloudFront update operation, it takes a considerable amount of time.

To switch more flexibly and quickly, create a Route53 weighted record that resolves to each ALB’s DNS name.

By setting the CloudFront origin to this weighted record, you can control where CloudFront sends origin traffic simply by changing the weights of the weighted record.

Since this method relies on DNS name resolution performed by the CloudFront servers, there’s no need to worry about things like DNS caching on the user side, and changes to the weights take effect extremely quickly.

One downside, however, is that a record can sometimes receive far more requests than its weight suggests, probably also because of this reliance on DNS resolution on the CloudFront servers (for example, 50% of requests going to a record weighted at 10%).

This is most likely because CloudFront resolves the name at fixed intervals and keeps sending requests to whichever ALB it resolved until the next lookup. For this reason, when using this method, rather than relying on autoscaling of the targets, you must provision enough machines in advance to handle a sizable share of requests.
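To make the weighted record concrete, here is a minimal sketch of the two records (one per cluster) expressed as a Route53 change batch. It assumes alias A records pointing at each ALB; the record name, set identifiers, ALB DNS names, and zone IDs are hypothetical placeholders, not our real values. The dict has the shape accepted by boto3’s `change_resource_record_sets`, but the call itself is left commented out so the sketch runs without AWS credentials.

```python
def weighted_alias_change(record_name, set_identifier, weight,
                          alb_dns_name, alb_zone_id):
    """One UPSERT for a weighted alias A record that points at an ALB."""
    if not 0 <= weight <= 255:  # Route53 weights are 0-255
        raise ValueError(f"weight out of range: {weight}")
    return {
        "Action": "UPSERT",
        "ResourceRecordSet": {
            "Name": record_name,
            "Type": "A",
            "SetIdentifier": set_identifier,  # distinguishes records sharing a name
            "Weight": weight,                 # relative weight within the group
            "AliasTarget": {
                "HostedZoneId": alb_zone_id,  # the ALB's canonical hosted zone ID
                "DNSName": alb_dns_name,
                "EvaluateTargetHealth": True,
            },
        },
    }


def build_change_batch(record_name, clusters):
    """clusters: list of (set_identifier, weight, alb_dns_name, alb_zone_id)."""
    return {
        "Comment": "Blue/Green cluster weights",
        "Changes": [
            weighted_alias_change(record_name, sid, w, dns, zone)
            for sid, w, dns, zone in clusters
        ],
    }


# Hypothetical values for illustration only.
batch = build_change_batch("origin.example.com", [
    ("old-cluster-name", 100, "old-alb.ap-northeast-1.elb.amazonaws.com", "ZALBZONEID"),
    ("new-cluster-name", 0, "new-alb.ap-northeast-1.elb.amazonaws.com", "ZALBZONEID"),
])
# import boto3
# boto3.client("route53").change_resource_record_sets(
#     HostedZoneId="ZYOURHOSTEDZONE", ChangeBatch=batch)
```

CloudFront’s origin would then be `origin.example.com`, and shifting traffic means only upserting new `Weight` values.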
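The caching explanation above can be illustrated with a small simulation. This is a simplified model I’m assuming, not CloudFront’s documented behavior: each caching resolver does one weighted lookup per interval and sends all of its requests for that interval to the answer it got. The resolver request volumes are made-up numbers.

```python
import random


def cached_dns_shares(weights, resolver_volumes, intervals, seed=0):
    """Per-interval request share for each record when lookups are cached.

    Assumed model: each resolver performs one weighted lookup per interval
    and every request it sends during that interval follows that answer.

    weights: record name -> Route53-style relative weight
    resolver_volumes: number of requests each resolver sends per interval
    """
    rng = random.Random(seed)
    names = list(weights)
    w = [weights[n] for n in names]
    per_interval = []
    for _ in range(intervals):
        counts = dict.fromkeys(names, 0)
        for volume in resolver_volumes:
            # One lookup per resolver, picked in proportion to the weights.
            chosen = rng.choices(names, weights=w)[0]
            # All of this resolver's requests go to the cached answer.
            counts[chosen] += volume
        total = sum(counts.values())
        per_interval.append({n: counts[n] / total for n in names})
    return per_interval


if __name__ == "__main__":
    weights = {"old": 90, "new": 10}
    # Hypothetical traffic: three busy resolvers carry all requests.
    few = cached_dns_shares(weights, [5000, 4000, 1000], intervals=20)
    print("worst interval for new:", max(s["new"] for s in few))
    # Many small resolvers over many intervals: the average approaches 10%.
    many = cached_dns_shares(weights, [10] * 1000, intervals=50)
    print("average for new:", sum(s["new"] for s in many) / len(many))
```

When a handful of resolvers carry most of the traffic, a single lucky lookup can send half of an interval’s requests to the 10% record, which is why capacity has to be prepared in advance rather than left to autoscaling.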

3. ALB Target Group

This feature had not been released when we made our choice, but if we were choosing now, it would be a leading candidate.

Requests can be routed by configuring the ALB to forward to multiple Target Groups, each with its own weight.

Since we haven’t used this feature yet, I can’t speak to the details. However, we expect it to switch faster than DNS-based routing and to distribute requests according to the weights, so it looks like a good option.
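For comparison with the DNS caching issue described for method 2, here is a sketch of per-request weighted routing. It assumes each request independently picks a target group in proportion to its weight; ALB’s actual internal algorithm isn’t something we’ve verified, and the target group names are hypothetical.

```python
import random


def per_request_shares(weights, requests, seed=0):
    """Share of requests per target group when each request is routed on its own.

    Assumption: every request picks a target group with probability
    weight / sum(weights); no decision is cached across requests.
    """
    rng = random.Random(seed)
    names = list(weights)
    picks = rng.choices(names, weights=[weights[n] for n in names], k=requests)
    return {n: picks.count(n) / requests for n in names}


if __name__ == "__main__":
    # Because no answer is shared by a burst of requests, even a short
    # window tends to stay close to the configured 10%.
    print(per_request_shares({"old-tg": 90, "new-tg": 10}, requests=1000))
```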

4. Kubernetes

Upstreams can be switched to the new cluster by building a multi-cluster network at the Kubernetes layer with Istio, or by using NGINX Ingress. However, this tends to be a large-scale undertaking, and it also makes unnecessary cross-AZ traffic more likely, so its disadvantages stand out compared with methods 2 and 3.

That said, there is some enthusiasm within the company for multi-cluster networking, so someone like cw-sakamoto may end up writing about introducing AWS App Mesh or something similar.

How do you create ALB and Route53 weighted records?

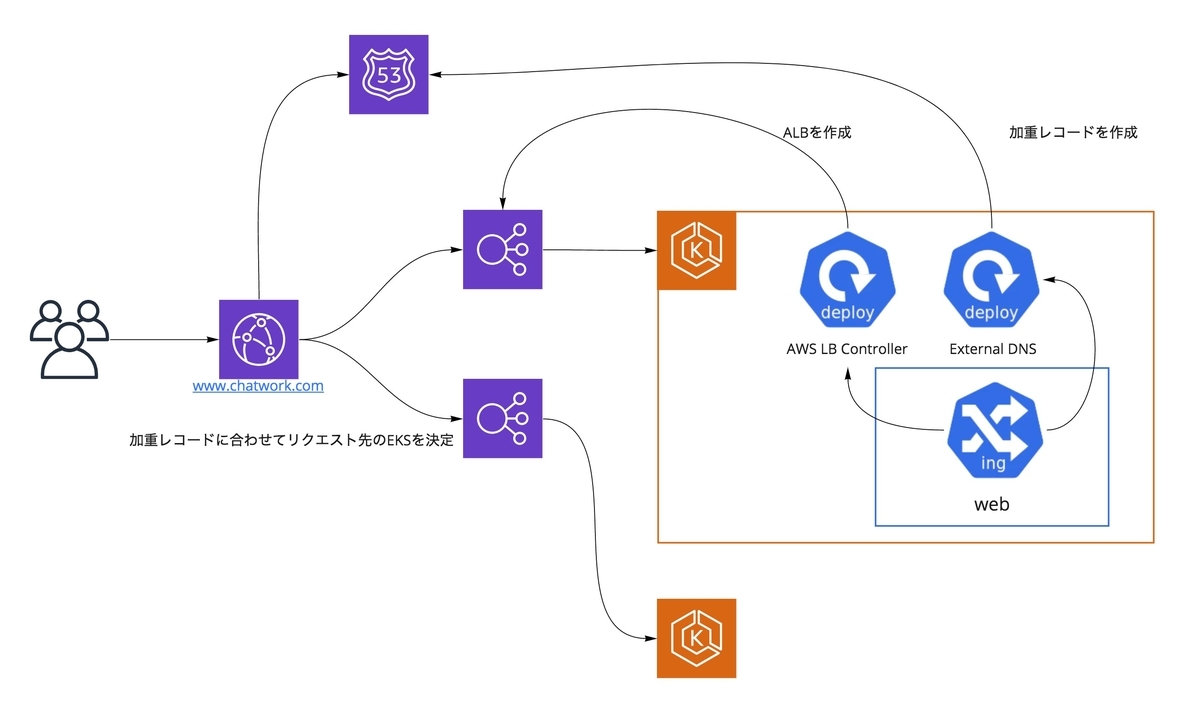

So, option 2, CloudFront -> ALB, is the only one left.

With this approach, an ALB and Route53 weighted records have to be created along with each new cluster, but to be honest, I really don’t want to do that kind of manual operation every three months.

This is where aws-load-balancer-controller (formerly alb-ingress-controller) and external-dns come in handy.

These convenient tools automatically create the ALB and the Route53 weighted records from annotations on Kubernetes resources.

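```yaml
annotations:
  # Handled by aws-load-balancer-controller, which creates the ALB
  kubernetes.io/ingress.class: alb
  # Placeholder: the DNS name CloudFront uses for origin resolution
  external-dns.alpha.kubernetes.io/hostname: "[The DNS name CloudFront uses for origin resolution.]"
  # Distinguishes this cluster's record within the weighted record set
  external-dns.alpha.kubernetes.io/set-identifier: new-cluster-name
  # Start the new cluster with no traffic
  external-dns.alpha.kubernetes.io/aws-weight: "0"
```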

With this in place, once you’ve created the new Kubernetes cluster and deployed the application, all that’s left to complete the migration is to change the weights, either directly in Route53 or via the external-dns.alpha.kubernetes.io/aws-weight annotation. (Which approach applies depends on the external-dns policy setting.)

One thing to be careful about: deploying the application creates the weighted record right away, so make sure external-dns.alpha.kubernetes.io/aws-weight is set to 0.

For example, if this value is 100 and the old cluster’s weighted record is also 100, 50% of requests will suddenly be routed to the new cluster. To keep requests from being routed there unexpectedly, always make sure this value is 0 when deploying to a new cluster.
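If the annotation route is used, the gradual shift can be scripted. The sketch below only builds `kubectl annotate --overwrite` commands that move traffic in steps; the ingress name, namespace, and kubeconfig contexts are hypothetical, and it assumes external-dns runs with a policy that updates existing records from the annotations.

```python
import shlex

AWS_WEIGHT = "external-dns.alpha.kubernetes.io/aws-weight"


def migration_plan(steps, ingress, namespace, old_context, new_context):
    """kubectl commands that shift traffic from the old cluster to the new one.

    steps: new-cluster percentages, e.g. [10, 50, 100]; the old cluster gets
    the remainder, so the two weights always add up to 100.
    """
    plan = []
    for pct in steps:
        if not 0 <= pct <= 100:
            raise ValueError(f"percentage out of range: {pct}")
        # Raise the new cluster's weight before lowering the old one's.
        for context, weight in ((new_context, pct), (old_context, 100 - pct)):
            plan.append([
                "kubectl", "--context", context,
                "annotate", "ingress", ingress,
                "--namespace", namespace, "--overwrite",
                f"{AWS_WEIGHT}={weight}",
            ])
    return plan


if __name__ == "__main__":
    # Hypothetical names: ingress "chat-web" in namespace "web",
    # kubeconfig contexts "old-cluster" and "new-cluster".
    for cmd in migration_plan([10, 50, 100], "chat-web", "web",
                              "old-cluster", "new-cluster"):
        print(shlex.join(cmd))  # or subprocess.run(cmd, check=True)
```

Printing the commands first makes it easy to review each step before running it.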
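The arithmetic behind that example is simply each record’s weight divided by the sum of the weights in the set. A small helper makes it easy to check a planned value before deploying; note that Route53 routes to all records with equal probability when every weight is 0, which the helper reflects. The record names are hypothetical.

```python
def traffic_share(weights):
    """Expected share of requests per weighted record: weight / sum(weights).

    If every weight is 0, Route53 routes to all records with equal
    probability, so that case returns an equal split.
    """
    total = sum(weights.values())
    if total == 0:
        return {name: 1 / len(weights) for name in weights}
    return {name: w / total for name, w in weights.items()}


print(traffic_share({"old-cluster": 100, "new-cluster": 100}))  # new-cluster: 0.5
print(traffic_share({"old-cluster": 100, "new-cluster": 0}))    # new-cluster: 0.0
```

These are long-run expectations; with CloudFront’s cached lookups the short-term split can deviate, as described in method 2.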

This is a bit off-topic, but aws-load-balancer-controller also supports creating only target groups, so I think routing with option 3, ALB Target Group, is also viable.

This method doesn’t need external-dns, so it may be a faster way of switching routing than the DNS-based approach. If we can verify that it works well, we might move in this direction down the line.
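As a rough idea of what option 3 might look like, aws-load-balancer-controller documents an `alb.ingress.kubernetes.io/actions.<name>` annotation whose JSON value can forward to several target groups with weights. The sketch below builds that annotation; the action name and ARNs are hypothetical, and the exact format should be checked against the controller’s documentation for the version you run.

```python
import json


def forward_action_annotation(action_name, target_groups):
    """Build a weighted forward action annotation (key, JSON value).

    target_groups: list of (target_group_arn, weight) pairs.
    ALB accepts a weight from 0 to 999 for each target group.
    """
    for _, weight in target_groups:
        if not 0 <= weight <= 999:
            raise ValueError(f"weight out of range: {weight}")
    key = f"alb.ingress.kubernetes.io/actions.{action_name}"
    value = json.dumps({
        "type": "forward",
        "forwardConfig": {
            "targetGroups": [
                {"targetGroupARN": arn, "weight": weight}
                for arn, weight in target_groups
            ],
        },
    })
    return key, value


if __name__ == "__main__":
    # Hypothetical ARNs for target groups registered by each cluster.
    key, value = forward_action_annotation("blue-green", [
        ("arn:aws:elasticloadbalancing:...:targetgroup/old-cluster/...", 90),
        ("arn:aws:elasticloadbalancing:...:targetgroup/new-cluster/...", 10),
    ])
    print(f"{key}: '{value}'")
```

As I understand the controller’s docs, the Ingress rule then points at the action by using the action name as the backend service name with the port name `use-annotation`.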

Summary

- Chatwork’s web portion is a stateless web application!

- Requests are routed between the new and old clusters with Route53 weighted records placed between CloudFront and multiple ALBs!

- aws-load-balancer-controller and external-dns are godsends.

That concludes our discussion of application migration tactics tailored to Chatwork’s Kubernetes cluster update strategy. Next time, we’ll talk about container design for PHP applications. However, since it overlaps with content for PHP Conference 2020 Re:born, the next installment won’t be posted until after the conference. If you’re thinking “I want to find out sooner!”, please watch “PHP Conference 2020.”